At the recent Australian Moot, a hot, emerging topic was “analytics”. Analytics have the potential to help students, teachers and institutions make better choices that lead to improved learning outcomes. Analytics were not possible in traditional education – they are, however, something a learning management system (LMS) can easily provide, if we can just figure out what they are…

What are analytics?

Analytics is a relatively new term; so new, in fact, that my spell-checker doesn’t recognise the word. It’s a real word, though; it must be, as there is a Wikipedia entry. The term has been popularised in the online world by Google, which offers analytics about your website so you can see details about your site’s visitors.

But what does the term analytics mean for education in the context of an LMS? At a panel session during the aforementioned moot, the topic was raised, and this was the first question asked: what are analytics? One of the panel members, Alan Arnold from the University of Canberra, bravely offered a definition: “Analytics are any piece of information that can help an LMS user do their job.”

“Analytics are any piece of information that can help an LMS user do their job.”

Thinking more deeply about the subject, I propose that LMS analytics are useful calculable quantitative gathered collections of information, based on the activities of users within or around the LMS, presented in forms that allow decisions leading to improved learning outcomes. (Quite a mouthful.) There’s lots of information about a user that can be collected in the LMS. The trick is to tease out and combine the useful bits and present them simply.

So the question is not so much “what are analytics?” but rather “what analytics do you need?” and perhaps “how can you get them?”

What analytics do you need?

Not all analytics are relevant to all users. If you are a teacher, you’re probably thinking about getting information that will help you teach better. If you’re a policy maker at an institution, you probably want to know how successful your teachers are with their students. But let’s not forget the students; there is information in the LMS that can help them too.

On the plane back from the Moot I decided it would be worth starting a catalogue of all the different analytics that could be captured in Moodle. At lunch I threw these at Martin and we cranked out a few more.

Analytics useful to Students

- Progress

- With an LMS, it is possible to achieve regular assessment within a course based on a rich set of finely chunked multi-modal activities, and while this can lead to deep learning, it can also be overwhelming for students. It is, therefore, useful for a student to know where they are up to in a course and what they have to do next. Students who use short-term planning tend to be more successful; they just need a quick snapshot of their progress.

- Relative success

- Deep learners are more successful, and deep learners are characterised by meta-cognition about their learning. Providing analytics about their relative success can let students know whether they are on track or whether they need further exposure to a topic. Relative success can also be used to introduce a competitive element into a cohort, which some educationalists recommend.

- Opportunities to interact

- If students are studying in isolation, it may not always be apparent when there are chances for them to interact with peers or teachers. Determining the level at which a student is interacting could be seen as an analytic that can be used to direct them to opportunities for communication and collaboration.
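To make the first two student analytics a little more concrete, here is a minimal sketch of how a progress snapshot and a relative-success figure might be calculated. It assumes a course can be represented as an ordered list of activity names, that we already know which activities a student has completed, and that each student has a single score; the function names and data shapes are hypothetical, not part of any Moodle API.

```python
from bisect import bisect_left
from typing import Optional


def progress_snapshot(activities: list[str], completed: set[str]) -> tuple[float, Optional[str]]:
    """Return the fraction of activities completed and the next incomplete one.

    `activities` is assumed to be in the order the teacher intends them to be done.
    """
    if not activities:
        return 0.0, None
    done = sum(1 for a in activities if a in completed)
    next_activity = next((a for a in activities if a not in completed), None)
    return done / len(activities), next_activity


def percentile_rank(score: float, cohort_scores: list[float]) -> float:
    """Percentage of the cohort scoring strictly below `score` (one measure of relative success)."""
    if not cohort_scores:
        return 0.0
    ordered = sorted(cohort_scores)
    below = bisect_left(ordered, score)  # number of scores strictly less than `score`
    return 100.0 * below / len(ordered)


if __name__ == "__main__":
    course = ["Quiz 1", "Forum post", "Assignment 1", "Quiz 2"]
    fraction, upcoming = progress_snapshot(course, {"Quiz 1", "Forum post"})
    print(f"{fraction:.0%} complete, next: {upcoming}")  # 50% complete, next: Assignment 1
    print(percentile_rank(72, [55, 60, 72, 80, 91]))  # 40.0
```

Percentile rank is only one way of expressing relative success; comparing a student’s score with the cohort mean or median would work just as well.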

Analytics useful to Teachers

- Student participation

- In an online world, it is more difficult for a teacher to know which students are participating and which need a push. Students can fail to participate for numerous reasons, usually valid ones. Sometimes a student may need to be encouraged to withdraw from a course and re-enrol later. Analytics can help determine when such decisions need to be made. That’s not to say that such information needs to be complex; it could be as simple as “traffic light” coloured icons next to a list of student names, ordered by risk.

- Student success

- Assuming a student is involved, a teacher also wants to know how successful they are. This could be the product of assessment and views of resources. If students are progressing through the course with unsuccessful results, then they may need to be encouraged to re-expose themselves to a topic within the course before progressing further.

- Student exposures

- Moving away from a course modality where “one size fits all”, it is useful to know how many times a student was exposed to a topic before they were successful. This is a differentiating factor among students in a cohort. If students are progressing with few exposures, perhaps they are finding the course too easy, perhaps even boring, and may need to be challenged further. If students are requiring numerous exposures before they are successful, then perhaps alternative presentations of a topic need to be created to suit the learning preferences of particular learners. Such an analytical tool can help a teacher deliver learning at an individual level.

- Student difficulty in understanding

- Through an analysis of exposures and assessment results, it may be possible to determine which topics, or areas within a topic, students are finding difficult. This may indicate areas that need to be revisited in the current delivery or enhanced in a future delivery of the course.

- Student difficulty in technical tasks

- When students are undertaking learning, the last thing they want is to be stifled by an inability to express their understanding because of the way a course is set up within the LMS. Students’ patterns of use within the LMS may indicate they are having such difficulties, and a teacher can be alerted to take action.

- Feedback attention

- Teachers spend time and effort creating feedback for students as a reflection of their understanding. It is useful to know which students have paid attention to such feedback, and which students may need to be encouraged to do so. Going beyond this, it may be possible to give a teacher information about the effectiveness of their feedback on students’ understanding, as reflected in subsequent assessment.

- Course quality

- In several institutions that I know of, a teacher’s effectiveness is judged partly by the quality of the courses they produce within the LMS, based on a set of metrics. Such measurements can be used for promotions and to guide the planning of professional development (PD) activities. If such metrics can be automated, then analytics can be produced for teachers that encourage them to improve their courses by increasing the richness of their resources, improving the quality of their activities, including more activities of different kinds and providing more opportunities for students to interact or collaborate.
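The “traffic light” idea under Student participation really can be that simple. The sketch below gives each student a risk score from days since their last access and the share of expected activities they have attempted, maps it to a colour and orders the list by risk. The risk formula, the 14-day cap, the thresholds and the field names are all assumptions made for illustration; a real analytic would need tuning for each course.

```python
from dataclasses import dataclass


@dataclass
class StudentActivity:
    name: str
    days_since_last_access: int
    activities_attempted: int
    activities_expected: int  # how many activities the teacher expected by now (assumed input)


def risk_score(s: StudentActivity) -> float:
    """Combine inactivity and missed activities into a score between 0 and 1.

    Assumed weighting: half from absence (capped at 14 days), half from the
    proportion of expected activities not yet attempted.
    """
    absence = min(s.days_since_last_access, 14) / 14
    if s.activities_expected > 0:
        missed = 1 - min(s.activities_attempted / s.activities_expected, 1.0)
    else:
        missed = 0.0
    return 0.5 * absence + 0.5 * missed


def traffic_light(score: float) -> str:
    """Map a risk score to a colour using assumed thresholds."""
    if score >= 0.6:
        return "red"
    if score >= 0.3:
        return "amber"
    return "green"


def participation_report(students: list[StudentActivity]) -> list[tuple[str, str, float]]:
    """Return (name, colour, rounded score) tuples, highest risk first."""
    scored = [(s.name, risk_score(s)) for s in students]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [(name, traffic_light(score), round(score, 2)) for name, score in scored]


if __name__ == "__main__":
    cohort = [
        StudentActivity("Ann", 1, 5, 5),
        StudentActivity("Bob", 5, 2, 5),
        StudentActivity("Cai", 14, 0, 5),
    ]
    for name, colour, score in participation_report(cohort):
        print(f"{colour:>5}  {name}  ({score})")
```

Keeping the score between 0 and 1 makes it easy to change the thresholds, or the weighting, without touching the display code.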

Analytics useful to Institutions

- Student retention

- Analytics can provide more information about students than simple pass/fail rates. Analytics can help determine when students may be at risk of failing and in which courses this is more likely to happen. Such analytics can help an institution to send resources to where they are needed most and to plan resources for the future.

- Teacher involvement

- There may be ethical implications in monitoring teacher involvement in a course, as it is akin to workplace surveillance. However, there is information in an LMS that can be presented in a useful way in relation to training and promotions. It might also be useful to tie a teacher involvement analytic, anonymously, to other analytics to find correlations.

- Teacher success

- As well as looking at success in terms of pass and fail, it may also be possible to determine where teacher interventions have encouraged students to achieve beyond their expected outcomes.

- Relative course quality

- Clearly not all courses are equal, but how do you determine which are better? There have been a number of attempts to manually measure aspects of a course such as accessibility, organisation, goals and objectives, content, opportunities for practice and transfer, and evaluation mechanisms (Criteria for Evaluating the Quality of Online Courses, Clayton R. Wright). If such metrics can be automated, then analytics can be created that reflect the quality of courses. Such metrics could also be fed back to teachers as an incentive to improve their courses.
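Institution-level analytics such as student retention are often aggregates of student-level ones. As a rough sketch, assuming each enrolment already carries an at-risk flag (for example from a participation analytic like the teacher’s traffic lights), the proportion of at-risk students in each course can be computed and ranked to show where resources might be needed most. The (course, student, at_risk) record layout and the course codes are invented for the example.

```python
from collections import defaultdict


def at_risk_rate_by_course(enrolments: list[tuple[str, str, bool]]) -> list[tuple[str, float]]:
    """Return (course, proportion of enrolled students flagged at risk), highest first.

    Each enrolment is assumed to be a (course, student, at_risk) tuple.
    """
    totals: dict[str, int] = defaultdict(int)
    at_risk: dict[str, int] = defaultdict(int)
    for course, _student, flagged in enrolments:
        totals[course] += 1
        if flagged:
            at_risk[course] += 1
    rates = [(course, at_risk[course] / totals[course]) for course in totals]
    # Sort by rate descending, then by course name so ties appear in a predictable order.
    rates.sort(key=lambda item: (-item[1], item[0]))
    return rates


if __name__ == "__main__":
    data = [
        ("BIO101", "s1", True), ("BIO101", "s2", False),
        ("CHEM100", "s1", False), ("CHEM100", "s3", False),
        ("MATH110", "s2", True), ("MATH110", "s3", True), ("MATH110", "s4", False),
    ]
    for course, rate in at_risk_rate_by_course(data):
        print(f"{course}: {rate:.0%} at risk")
```

A raw proportion can mislead for very small courses, so a report like this would probably show enrolment counts alongside the rate.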

How can you get them?

So, you want these analytics, but how can you get them? Some of them may already be accessible via various mechanisms; however, I think we still need to work out how best to draw this information together in a simple way for specific users.

Moodle currently logs most actions that take place within the LMS. It is possible to view log reports, but they are limited to interaction in terms of activities within a course.

There are a number of existing plugins and extensions to Moodle that attempt to provide analytics to users. Among these is a batch of report generators, many of which are quite configurable.

- The Configurable reports block plugin is a configurable system that allows reports to be created and used by various roles. It may be a good model to use to start a set of standard analytics reports within an institution.

- The Custom SQL queries report plugin allows an administrator to run any query against the database used by Moodle. It’s clearly flexible, but not something you can put into the hands of all users.

- The Totara LMS is a rebranded, extended version of Moodle. One of the aspects built onto the standard Moodle install is a reporting facility that provides customisable reports to users of different roles.

There are also a number of blocks, available and in the works, that attempt to display analytical information to users.

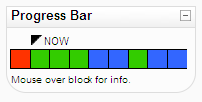

- My own Progress Bar block shows a simple view of course progress to students and an overview of student progress to a teacher.

- The Engagement analytics block was still in development when I first wrote this, but I had seen a demo of it and it looked good; it is now available. The block allows a teacher to specify expected targets for students, then presents teachers with simple traffic-light icons next to the names of students at risk.

- The Course Dedication block estimates the time each student has spent online in a course.

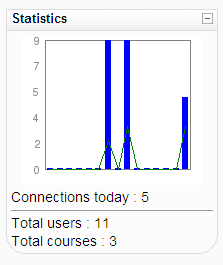

- The Graph Stats block shows overall student activity in a course over time.
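Estimating time spent online from log entries, as the Course Dedication block does, is less obvious than it sounds, because the log records clicks, not time. One common heuristic is sketched below: sort a student’s log timestamps, add up the gaps between consecutive entries, and treat any gap longer than a session timeout as a break between sessions. This is an assumption about the general approach, not a description of the block’s actual code, and the 30-minute timeout is arbitrary.

```python
def estimate_dedication(timestamps: list[int], session_timeout: int = 30 * 60) -> int:
    """Estimate seconds spent online from Unix timestamps of a user's log entries.

    Gaps longer than `session_timeout` are treated as breaks between sessions and
    are not counted. Time after the last entry of each session is unknown, so it
    is not counted either, which makes this a conservative estimate.
    """
    ordered = sorted(timestamps)
    total = 0
    for previous, current in zip(ordered, ordered[1:]):
        gap = current - previous
        if gap <= session_timeout:
            total += gap
    return total


if __name__ == "__main__":
    # Two sessions: three entries ten minutes apart, then, five hours after the
    # first entry, two entries five minutes apart.
    start = 1_700_000_000
    log = [start, start + 600, start + 1200, start + 18000, start + 18300]
    print(estimate_dedication(log) // 60, "minutes")  # 25 minutes
```

The choice of timeout matters a great deal: too short and reading a long resource looks like absence, too long and a forgotten open tab looks like study.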

Simple queries

A lot of these analytics can already be queried or calculated from the data stored in the Moodle database. The Report plugin type is available for presenting pages of information to users and is applicable for analytics. The Block plugin type is available for simple, compact presentation of information. Both of these APIs can present different displays to users with differing roles.
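As a small example of calculating an analytic from data that is already logged, the sketch below counts each user’s logged actions in a course since a given time, along with their most recent action, listing the least active users first. It uses an in-memory SQLite table whose columns (userid, course, time, module, action) only loosely resemble Moodle’s older log table; the real schema and table prefix depend on the Moodle version, so treat the table as an assumption. Inside Moodle, a report or block would issue such a query through Moodle’s own PHP database API rather than from Python.

```python
import sqlite3


def activity_summary(conn: sqlite3.Connection, course: int, since: int) -> list[tuple[int, int, int]]:
    """Return (userid, number of logged actions, last action time) for a course.

    Only actions at or after the Unix timestamp `since` are counted; users are
    ordered from least to most active so that quiet students appear first.
    """
    query = """
        SELECT userid, COUNT(*) AS actions, MAX(time) AS last_action
        FROM log
        WHERE course = ? AND time >= ?
        GROUP BY userid
        ORDER BY actions ASC, userid ASC
    """
    return conn.execute(query, (course, since)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    # Simplified, hypothetical log table for illustration only.
    conn.execute(
        "CREATE TABLE log (id INTEGER PRIMARY KEY, userid INTEGER, course INTEGER,"
        " time INTEGER, module TEXT, action TEXT)"
    )
    rows = [
        (2, 10, 1000, "forum", "view discussion"),
        (2, 10, 1100, "quiz", "attempt"),
        (3, 10, 1050, "resource", "view"),
        (2, 11, 1200, "forum", "view discussion"),
    ]
    conn.executemany(
        "INSERT INTO log (userid, course, time, module, action) VALUES (?, ?, ?, ?, ?)", rows
    )
    print(activity_summary(conn, course=10, since=0))  # [(3, 1, 1050), (2, 2, 1100)]
```

Students who never appear in the log are missing from this result entirely, so a real participation report would need to join against enrolment data to catch those who have not logged in at all.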

New logging

Currently, most of the logging that takes place in Moodle ends up in a single table. For simple lookups, this is not a problem, but for complex conjunctive queries, working with a large log table can hog the resources of a server. The current system of logging is likely to be a target of future work at Moodle HQ so that both the recording and retrieval of information can be achieved efficiently.
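One general technique for this kind of problem (not necessarily what Moodle HQ will choose) is to keep the raw log for recording but also maintain a compact summary table that analytics can query instead of scanning every log row. The sketch below keeps one row per user, course and day in SQLite; the `daily_activity` table, its columns and the helper functions are invented for illustration.

```python
import sqlite3

SCHEMA = """
CREATE TABLE log (
    id INTEGER PRIMARY KEY, userid INTEGER, course INTEGER, time INTEGER, action TEXT
);
-- Hypothetical pre-aggregated table: one row per user, course and day.
CREATE TABLE daily_activity (
    userid INTEGER, course INTEGER, day INTEGER, actions INTEGER,
    PRIMARY KEY (userid, course, day)
);
"""


def record_action(conn: sqlite3.Connection, userid: int, course: int, time: int, action: str) -> None:
    """Write the raw log row and bump the matching daily counter in one transaction."""
    day = time // 86400  # whole days since the Unix epoch (UTC)
    with conn:  # commits both statements together, or rolls back on error
        conn.execute(
            "INSERT INTO log (userid, course, time, action) VALUES (?, ?, ?, ?)",
            (userid, course, time, action),
        )
        conn.execute(
            "INSERT OR IGNORE INTO daily_activity (userid, course, day, actions) VALUES (?, ?, ?, 0)",
            (userid, course, day),
        )
        conn.execute(
            "UPDATE daily_activity SET actions = actions + 1 "
            "WHERE userid = ? AND course = ? AND day = ?",
            (userid, course, day),
        )


def active_days(conn: sqlite3.Connection, userid: int, course: int) -> int:
    """Count the days on which a user was active, reading only the small summary table."""
    row = conn.execute(
        "SELECT COUNT(*) FROM daily_activity WHERE userid = ? AND course = ?",
        (userid, course),
    ).fetchone()
    return row[0]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    for t in (1000, 2000, 90000):  # two actions on day 0, one on day 1
        record_action(conn, 2, 10, t, "view")
    print(active_days(conn, 2, 10))  # 2
```

The trade-off is a little extra work on every write in exchange for much cheaper reads; an alternative is to rebuild such summaries periodically in a batch job.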

Several of the interactions needed for the analytics above cannot be measured using the current log and module tables. Approaching the recording of user interactions from an analytical perspective may lead to new information being captured for later analysis and presentation.

AI or user optimised queries

When you have a wealth of user interaction information available, why stop at the limits of human developers?

- Genetic algorithms, neural networks and other heuristic approaches may reveal newly refined analytics or even new analytics altogether.

- Crowd-sourced optimisation of analytics reports may allow a collective intelligence to refine analytics so that they are even more valuable and reliable.

Analysing analytics

Providing analytics allows us to study the impact that analytics can have on the users who view them. This allows us to ask general research questions, such as “which analytics promote better learning, teaching or retention?” Specific questions can also be asked about individual analytics, such as “does feedback attention have an impact on learning outcomes?” Cue the savvy educationalists…
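To give a flavour of how the feedback question might be approached, here is a sketch of a permutation test comparing later assessment scores of students who viewed their feedback with those who did not. A permutation test is a standard, assumption-light way to ask whether an observed difference in means could easily arise by chance. The scores in the example are made up, and a real study would also need to account for confounders such as prior achievement, since keen students may both read feedback and score well.

```python
import random
from statistics import mean


def permutation_test(group_a: list[float], group_b: list[float],
                     rounds: int = 10_000, seed: int = 0) -> tuple[float, float]:
    """Return (observed mean difference a - b, two-sided p-value).

    Both groups must be non-empty. The p-value is the share of random
    relabellings whose absolute mean difference is at least as large as the
    observed one.
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(rounds):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        # A tiny tolerance guards against floating-point rounding in the comparison.
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
    # Adding one to numerator and denominator keeps the p-value from being exactly zero.
    p_value = (extreme + 1) / (rounds + 1)
    return observed, p_value


if __name__ == "__main__":
    viewed_feedback = [72, 80, 68, 90, 85]  # invented scores
    ignored_feedback = [60, 65, 70, 58, 66]  # invented scores
    diff, p = permutation_test(viewed_feedback, ignored_feedback)
    print(f"difference in means: {diff:.1f}, p = {p:.3f}")
```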