Do we know what teachers want and does it really matter?

Among school IT managers, we often talk about providing secure and stable systems. The quality of IT service can be assessed objectively using ITIL and other frameworks. But in an educational setting, understanding the IT needs and desires of users, who are predominantly teachers, is essential. Relationships between IT support staff and teachers can become siloed and confrontational without a strong understanding of what teachers expect from IT. I proposed a model of what teachers want from IT, based on my tacit understanding, and refined it with teachers at various levels of responsibility. This post is the result.

What teachers want doesn’t constitute all that IT professionals need to do in educational institutions. Teachers are not usually aware of planning, testing or maintenance. They do not worry about technical policies, vendor engagement or documentation. Yet all these things are needed and indirectly affect teachers. The reason IT professionals need to understand what teachers want is so that we can work together in a way that makes sense.

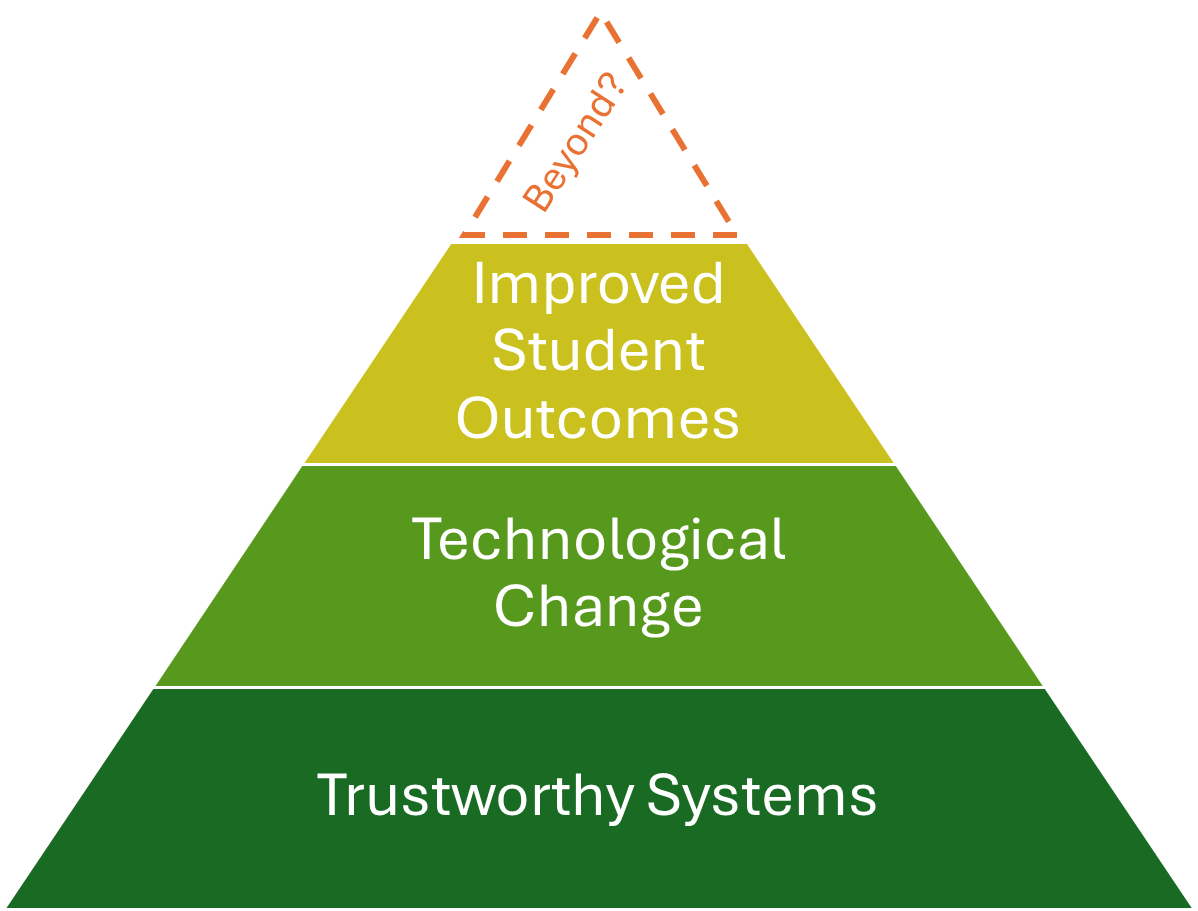

The relationship between teaching staff and an IT Team matures in stages. For example, IT staff may have an ingenious plan to improve student outcomes, but if teachers don’t trust IT systems, like AV in classrooms, they will not cooperate in such projects. Conversely, as IT teams deliver more trustworthy systems and successfully deliver change, teachers will be more likely to seek collaboration with IT staff on projects targeting student success. The following diagram depicts what teachers want in a graduated hierarchical sense. I don’t think teachers necessarily have this model in their mind, but I know that they won’t engage IT staff at higher levels if they are not satisfied with the lower levels first.

Trustworthy Systems

“Trust is earned in drops and lost in buckets.” (Attributed to multiple people)

When IT systems don’t work and this disrupts a teacher’s lesson plans, teachers lose trust in those systems. They will avoid using them or, when they can’t avoid them, they will become frustrated. It doesn’t matter to teachers whether the cause of an IT issue is infrastructure, the failing of the IT Team, an external provider or even the teaching staff themselves. Their focus is that they can’t do their job. If there is a series of issues over a period, not only will teachers distrust IT systems, they will also distrust IT staff to fix issues. They will also be less inclined to report issues, which compounds the problem.

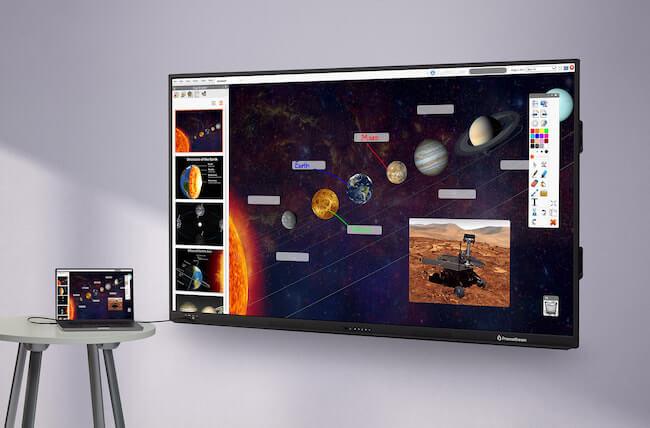

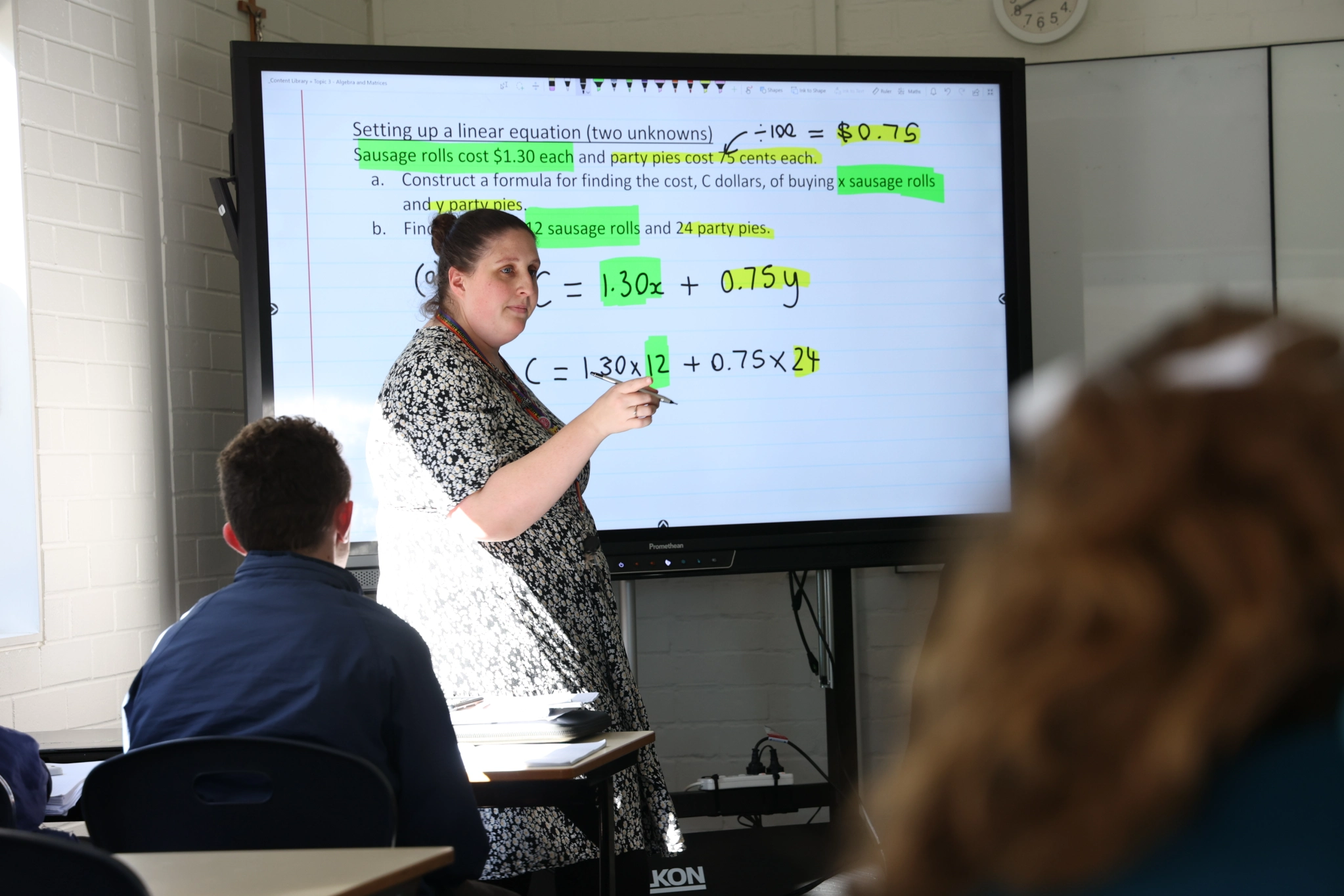

Teaching staff want to walk into a classroom assuming their computer, the network, software and AV facilities will all work so they can deliver teaching. They are not interested in security, backups, system efficiency or the latest update; the focus of teachers is student learning.

To improve trust, IT teams can do the following.

- Work on general system stability more than system expansion, and encourage third-party providers to do the same.

- Proactively monitor systems using dashboards and automation to detect problems before they are reported.

- Encourage a culture of reporting among staff by verbally asking them to let you know when they spot issues. Make reporting easy. Repeat this regularly at meetings. Thank reporters for taking time to report (especially less confident staff, who may be unsure whether the issue is their fault).

- Create a collaboration culture in the IT Team. I use the phrase “working alongside teachers” and encourage IT staff to think of themselves as indirect educators. I regularly discourage defensiveness about system issues.

- Get out of the IT Office and into staffrooms, even when there are no problems. This gives staff opportunities to ask for assistance with unreported problems and increases visibility of the IT Team. I attend staff meetings to present IT matters, but also to put a face to the IT Team.

- Work with school leaders to set realistic teacher expectations: IT systems are not going to work 100% of the time. Teachers need to be able to pivot their lesson plans when systems don’t work. Of course, any banked trust very quickly degrades with repeated or continuous outages.

Technological Change

Without trust in systems, teachers (particularly execs) will often try to drive technology change on their own. They may be motivated by a single third-party provider, rather than broad consultation. They may not consider scope, time, quality or communication, which leads to systems that are difficult to implement and integrate. The resulting systems are often ones that other teachers don’t feel they own and may resent using.

When the IT Team lead change without trustworthy systems, they will not have positive relationships they can draw on. Key stakeholders will not volunteer their time and system champions will not emerge.

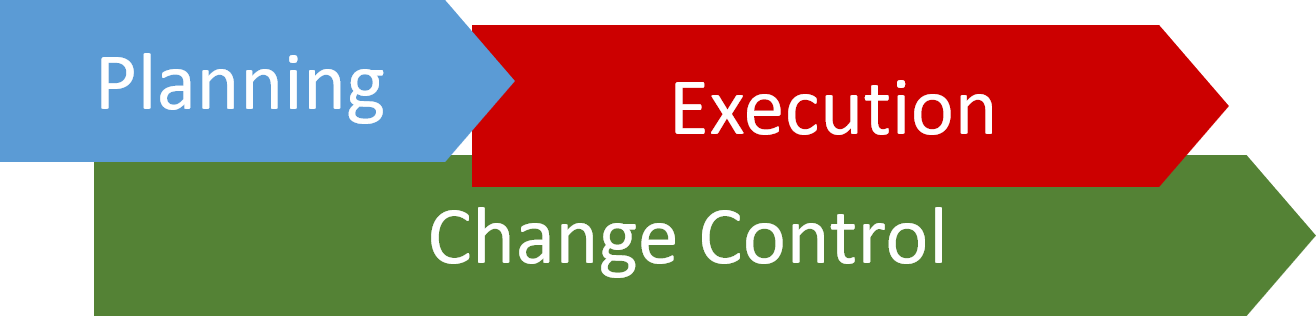

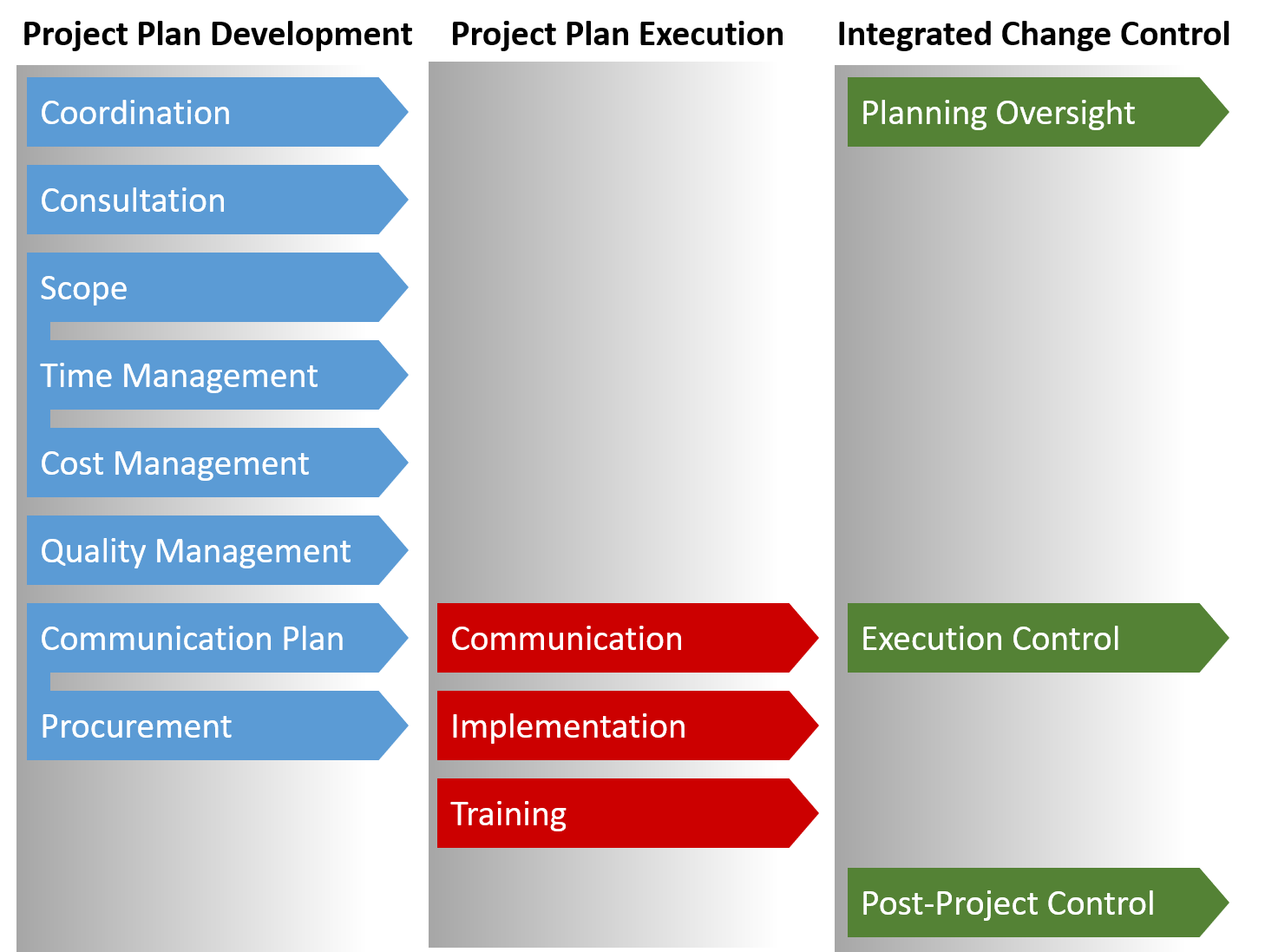

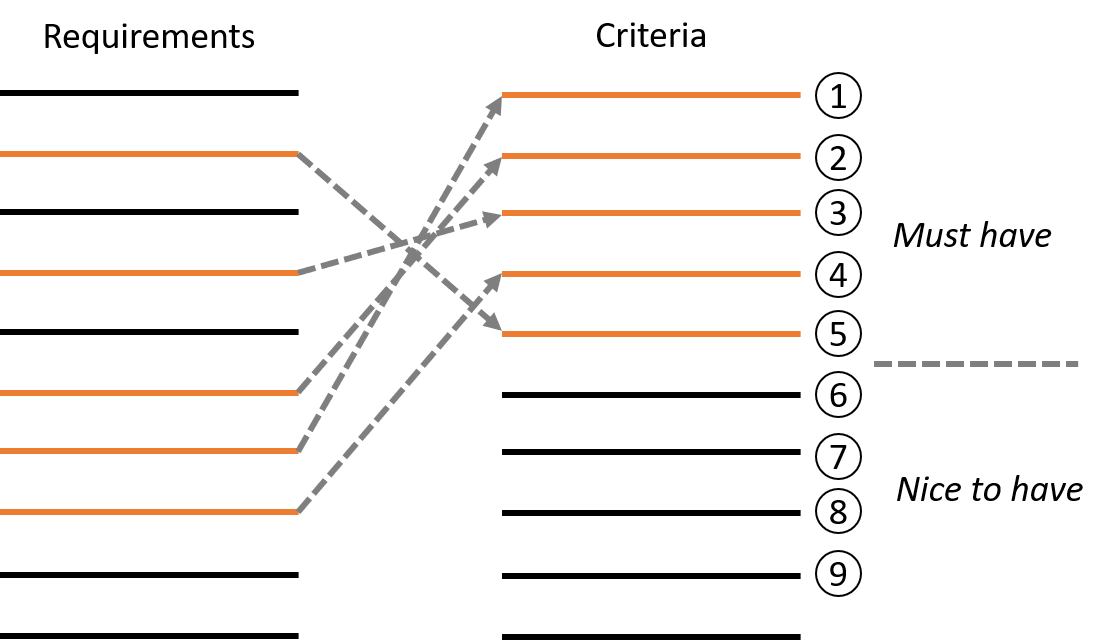

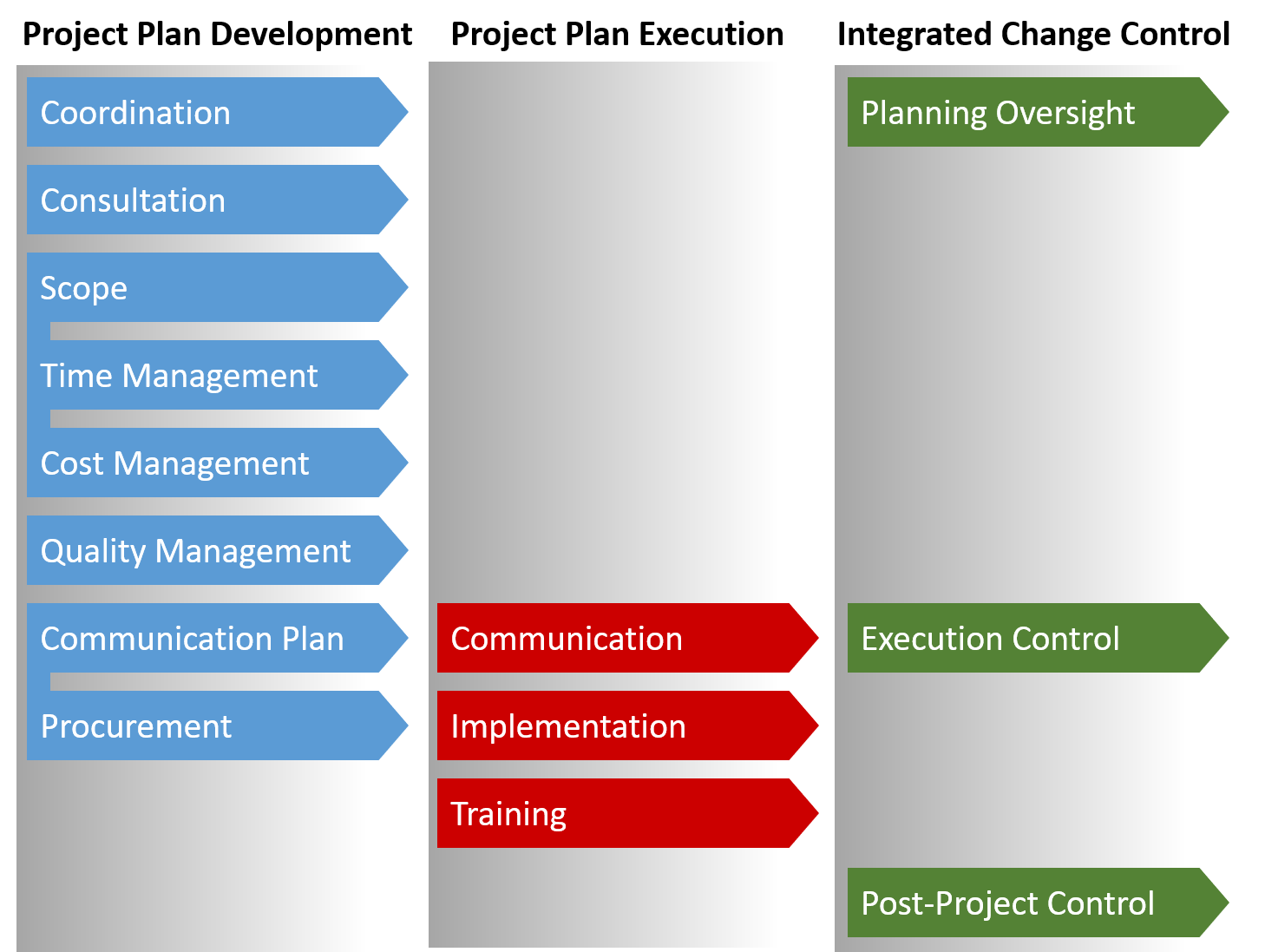

Once there is trust in systems, teachers are more likely to see the IT Team as partners in change. I’ve written about project management in schools previously, so I won’t repeat that here. To summarise, good EdTech project management requires planning, change control and execution. This approach can be scaled down for smaller projects, but it should be followed in full for significant user-facing systems, such as an LMS or SIS. Following such an approach greatly increases the chance of success.

From a teacher’s perspective, an unsuccessful technological change is one that causes them to change their teaching practices. If teachers need to recreate resources and lessons, resentment towards the system will build. If a new system doesn’t support a particular function efficiently, it will be seen as unsuccessful, even with gains elsewhere.

Ultimately, the target is to bring about suitable systems that teachers feel they own. Setting realistic expectations before teachers use a system is also important. Successful IT change occurs when the following are in place.

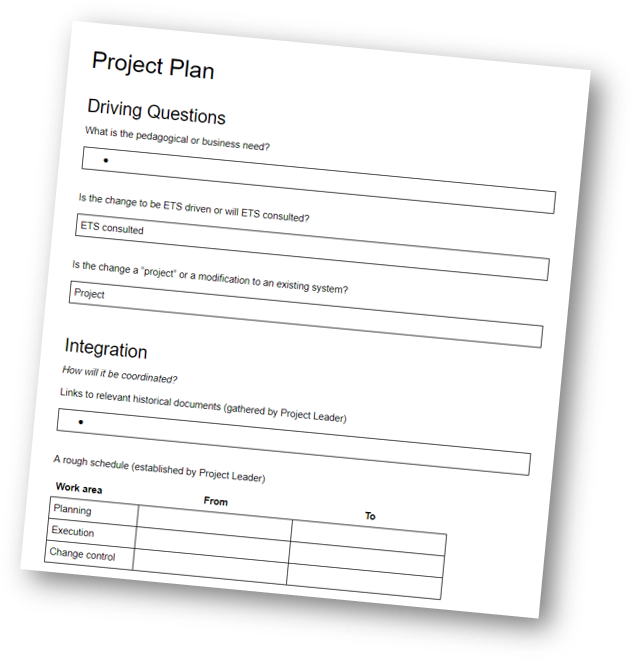

- A scalable, easy-to-follow change process with tangible instruments, such as planning templates, gives teachers confidence to be involved.

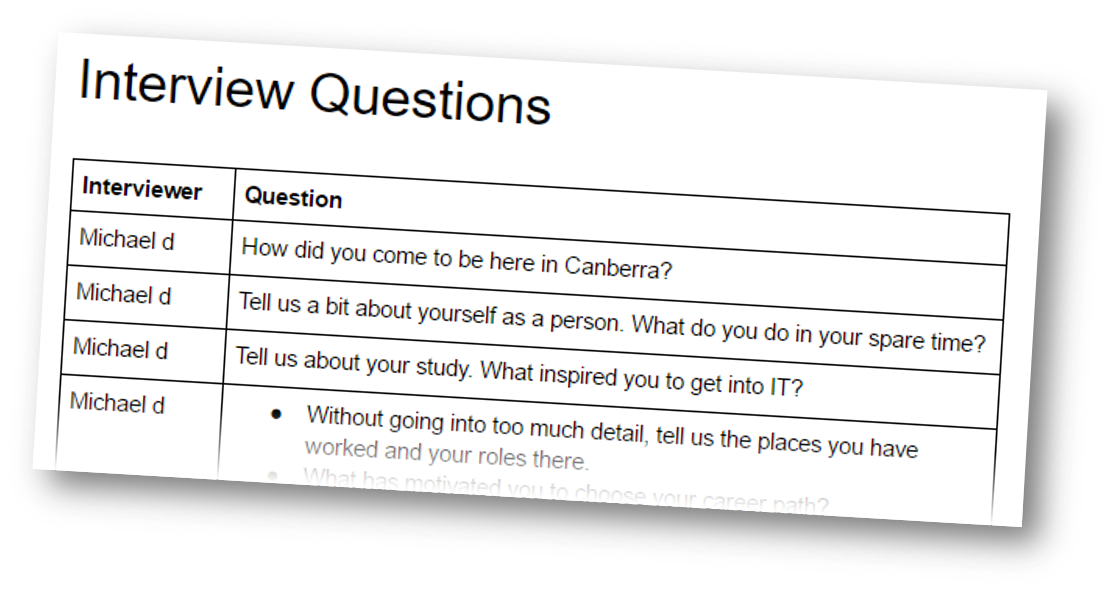

- Consultation with key stakeholders to develop appropriate requirements, which leads to a sense of user ownership.

- System owners who become system champions, keen to promote systems that benefit teaching staff.

- Communication that sets expectations of benefits for staff, so when change comes they will pay attention.

Improved Student Outcomes

With trusted systems in place and a number of successful change projects completed, the relationship between teachers and the IT Team can develop into one that allows collaboration on teachers’ primary goal: improved student outcomes.

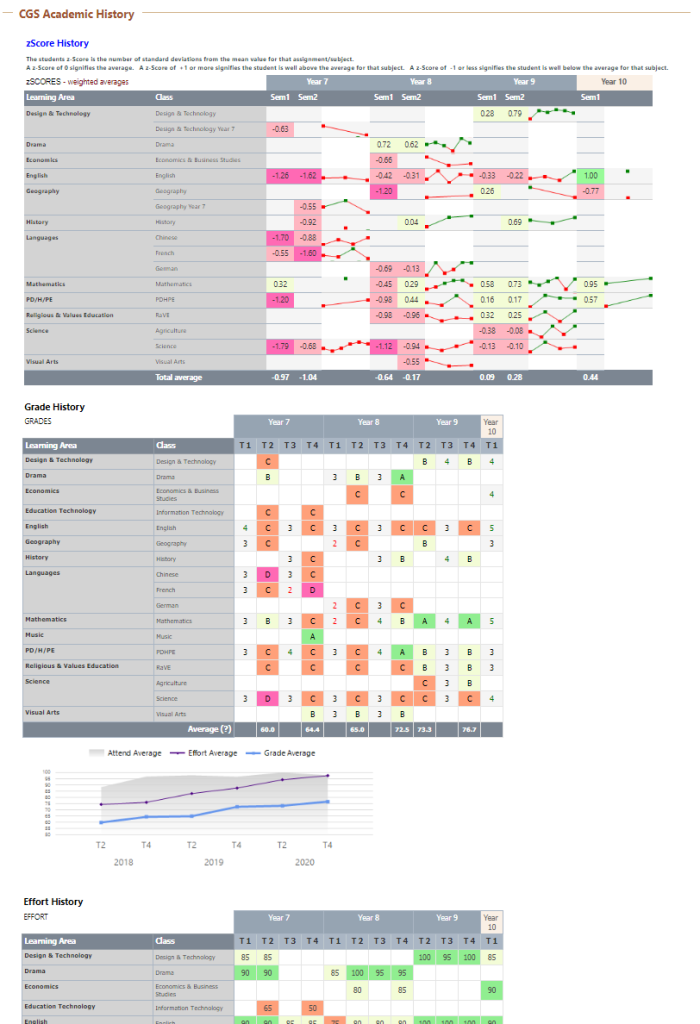

Outcomes include student academic grades, but are not limited to those. Student mood and other pastoral measures are also outcomes teachers work with students to improve. Attendance is the best predictor of success for most students and is something that can be improved.

Often the impact from IT comes in the form of improvements to workflows, processes and interfaces for accessing information. Teachers can use these to improve student outcomes by viewing students both within their cohort and individually. Differentiated teaching for personalised learning happens when it is well informed by data. Sometimes, teachers are just trying to get to know students more objectively around the start of the teaching period or when writing reports.

The relationship between the IT Team and teachers may start with the provision of data for analysis, then pre-defined reports and eventually analytics. What distinguishes analytics from simpler presentations of data is that analytics are predictive, proactive and available on demand. I’ve written about analytics in schools previously.

To achieve successful collaboration on improved student outcomes, concentrate on the following.

- Seek questions that teachers need answered, and an understanding of what data is available to answer them.

- Develop integrations and data warehouses that bring data together, so that it can be presented efficiently and can also trigger active alerts when threshold values are detected.

- Understand how data can be used to benefit student outcomes; providing masses of reports will only overwhelm users. Linking proactive alerts to well-presented information means teachers can be drawn in and use systems, applying the information to practical improvements for students.
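As a rough illustration of the threshold-based alerting mentioned above, here is a minimal Python sketch that flags students whose attendance falls below a set level. The record structure, the 90% threshold and the function names are all hypothetical assumptions, not features of any particular SIS or data warehouse; in practice, the records would come from whatever integrations bring the school’s data together.

```python
from dataclasses import dataclass

# Hypothetical threshold (an assumption): flag students below 90% attendance.
ATTENDANCE_THRESHOLD = 0.90


@dataclass
class AttendanceRecord:
    """Hypothetical per-student summary, e.g. pulled from a data warehouse."""
    student_id: str
    sessions_attended: int
    sessions_scheduled: int


def attendance_rate(record: AttendanceRecord) -> float:
    """Return the fraction of scheduled sessions the student attended."""
    if record.sessions_scheduled == 0:
        return 1.0  # nothing scheduled yet, so nothing to flag
    return record.sessions_attended / record.sessions_scheduled


def attendance_alerts(records, threshold=ATTENDANCE_THRESHOLD):
    """Return (student_id, rate) pairs below the threshold, lowest rate first."""
    flagged = [
        (r.student_id, attendance_rate(r))
        for r in records
        if attendance_rate(r) < threshold
    ]
    return sorted(flagged, key=lambda pair: pair[1])


if __name__ == "__main__":
    records = [
        AttendanceRecord("S001", 48, 50),
        AttendanceRecord("S002", 40, 50),
        AttendanceRecord("S003", 44, 50),
    ]
    # Only students below the threshold are reported, so teachers see
    # a short, prioritised list rather than a mass of reports.
    for student_id, rate in attendance_alerts(records):
        print(f"{student_id}: {rate:.0%} attendance")
```

Sorting the flagged students by rate is one way to link an alert to a presentation that draws teachers towards the students who most need attention; a real system would likely deliver this through a dashboard or notification rather than printed output.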

Beyond?

To be honest, I’m not sure if teachers have expectations beyond the levels described above. I have ideas for evolving the nature of teaching through student models, knowledge profiling and automated individualised learning schedules. But that requires a relatively radical departure from the current classroom situation, so I’m not sure teachers want that.

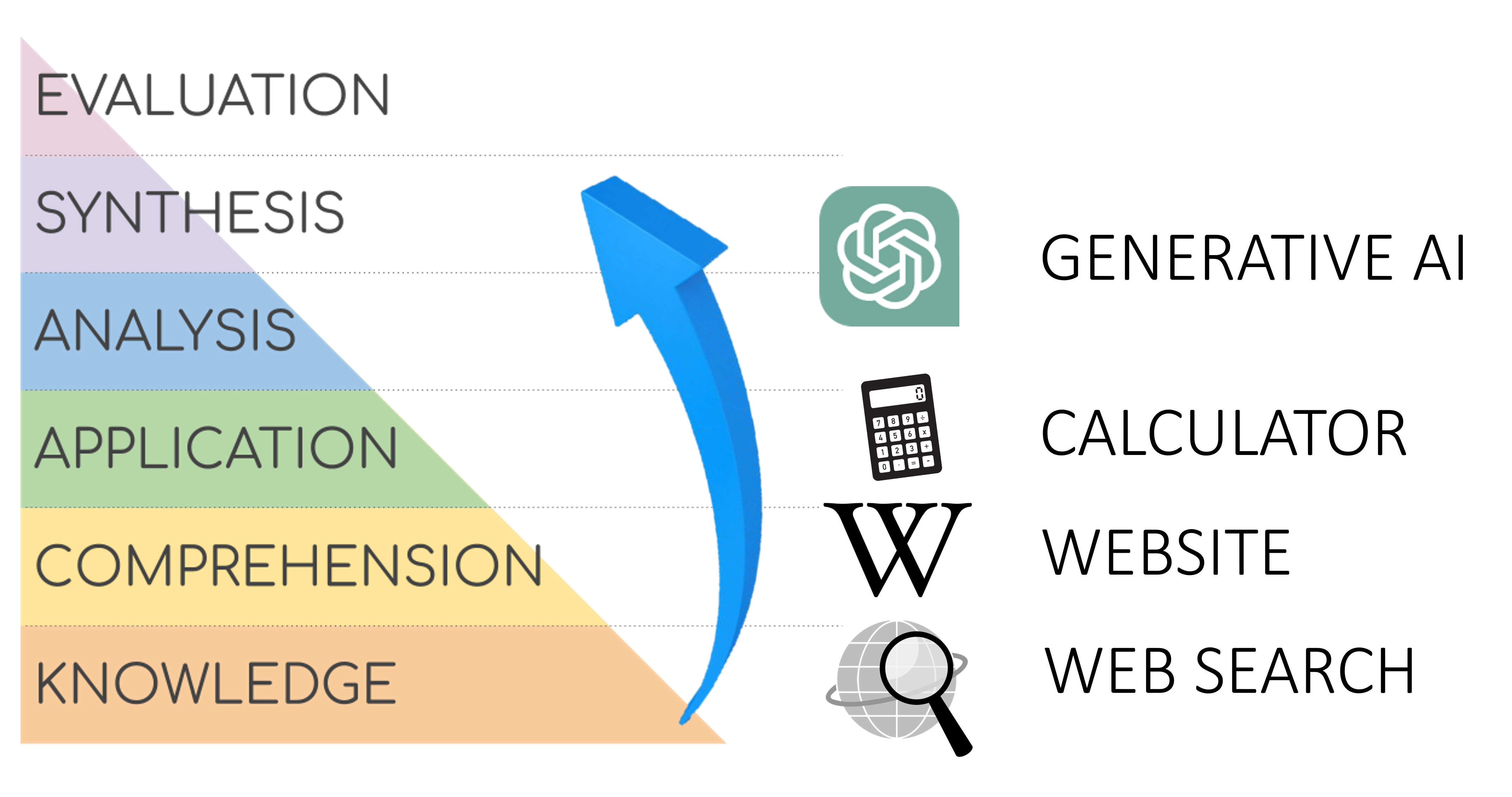

I hesitate to mention the notion of AI as an agent that will satisfy teachers’ desires beyond what I’ve described. I am seeing examples of Generative AI being used to take raw lesson plans and create more personalised versions based on student interests, but time will tell whether that is useful for more than adding engaging variety.

I may have to revisit this post if I reach the top and can see beyond.